In a world where smartphones serve as pacifiers and toddlers’ bedtime stories are generated by parents feeding prompts to ChatGPT, children are not just growing up; they’re booting up. But as we watch these young technology enthusiasts explore artificial intelligence (AI), are we inadvertently setting sail into uncharted waters?

Today, many kids can’t imagine a world without AI, and for good reason. AI has seamlessly integrated into many facets of daily life, shaping the way we learn, play, and interact. For the younger generation, it has become an interactive companion and an integral part of their developmental environment. That integration has reshaped childhood compared with the experience of previous generations. According to the American Academy of Child and Adolescent Psychiatry, children ages 8 to 12 spend four to six hours a day “watching or using screens,” whereas teens spend “up to nine hours.” If children spend this much time on their devices, how much of it is also spent interacting, knowingly or unknowingly, with AI?

There are rising concerns about a potential overreliance on AI. Children may come to prioritize AI-generated content over real-world experiences, which could severely harm their social and emotional development. Additionally, children are particularly vulnerable when it comes to privacy. At the moment, few regulations specifically govern how AI platforms collect, store, and use children’s data.

Paulo Carvão, a global technology executive and current senior fellow at the Harvard Advanced Leadership Initiative, told the Register Forum, “We need guardrails so that we continue to enjoy technology in a safe way. Children are at the front of this discussion. It’s very important that we’re helping children not only learn the technology but also develop their critical thinking.”

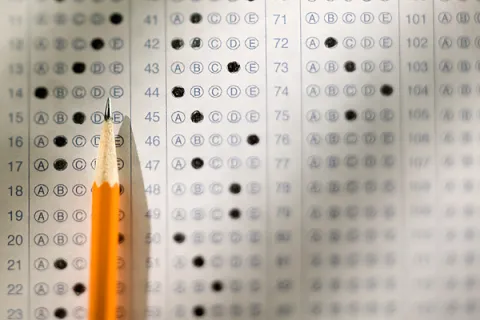

The use of generative AI for academics has also sparked debates in schools. With no clear guidelines, the first natural solution appears to be a ban. However, a ban is not a sufficient remedy, because there is no way to fully cut off children’s access to generative AI. Instead, as Carvão told the Register Forum, “Education could be an introductory space for a public option AI, AI developed by governments [that is] not necessarily based on profit interests.”

State authorities are struggling to harness the potential of AI to enhance learning in schools: only three states have provided guidance on appropriate use, according to the Center on Reinventing Public Education. In Massachusetts, Governor Healey’s new $1.23 billion initiative, the FutureTech Act, aims to enhance the digital experience for residents by investing in emerging technologies and modernizing government IT systems for increased security. It remains uncertain whether the act will address the challenge of integrating AI into the education system.

As we look toward the future, it becomes increasingly evident that the challenges posed by emerging technologies will only intensify. Unlike the children who already use it, generative AI is still in its infancy. In this era of unprecedented innovation, thoughtful consideration and proactive regulations are essential to ensure a harmonious incorporation of AI into our evolving world.